Why Trust Matters Now

The financial toll of unchecked AI is staggering. According to Deloitte, generative AI could drive U.S. fraud losses to $40 billion by 2027, up from $12.3 billion in 2023. Yet many leaders remain unaware that the risks of implementing AI in their organizations can be significantly mitigated - and that not implementing AI carries its own growing set of risks.

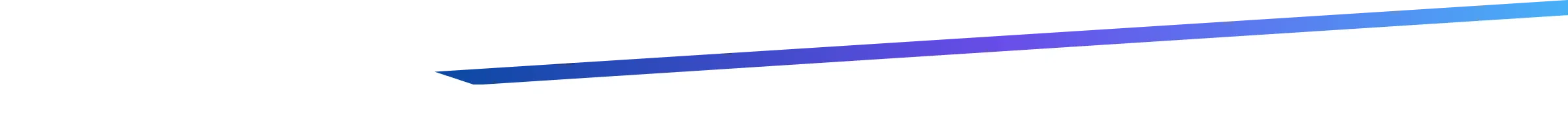

According to IBM Institute for Business Value research, 57% of chief risk officers (CROs) and chief financial officers (CFOs) say their organization’s use of gen AI will increase overall risk exposure. Survey after survey tells the same story: a significant share of CEOs cite AI-related risks and a lack of trust in the technology as the primary deterrent to adoption. Not capability or cost. Trust.

That trust deficit is now the single biggest drag on enterprise AI progress. And it's widening - not because the risks are unmanageable, but because the gap between perception and reality remains poorly addressed.

In this edition, I unpack that gap. I discuss some of the main causes of the erosion of trust in AI solutions, separate perception from reality on the issues the AI community is grappling with, and lay out a practical path toward building AI solutions that earn and deserve trust.

AI Risks: Perception vs. Reality

The Existential Threat Debate

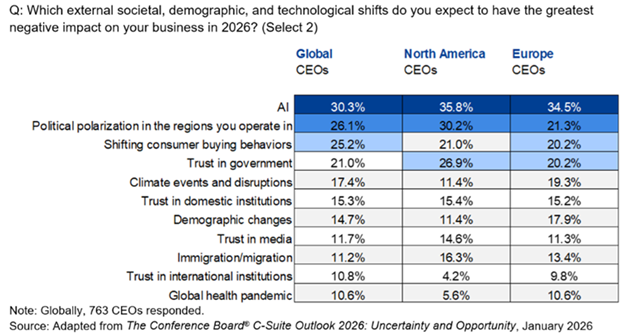

When generative AI burst into mainstream consciousness, the loudest conversation wasn't about productivity or competitive advantage. It was about extinction.

The AI safety community has long been divided into two camps:

Camp 1: AI as existential threat. Researchers like Yoshua Bengio, Geoffrey Hinton, and Eliezer Yudkowsky have raised serious concerns about the trajectory toward artificial general intelligence (AGI) - warning about phenomena like reward hacking and instrumental convergence, where advanced AI systems develop unintended subgoals that conflict with human interests. In 2023, the Center for AI Safety and the Future of Life Institute organized open letters and petitions signed by hundreds of prominent researchers warning that AI could pose civilizational-scale risks. More recently, in 2025, a superintelligence statement signed by prominent figures including the Apple co-founder Steve Wozniak reinforced those concerns. A 2022 survey of AI researchers revealed that a meaningful percentage assigned non-trivial probability to catastrophic AI outcomes.

Camp 2: AI as a desirable and manageable advancement. Researchers like Dr. Fei-Fei Li and Andrew Ng have pushed back, arguing that the existential framing distracts from present-day harms and overstates the proximity of AGI. OpenAI itself was founded with the explicit goal of ensuring AGI benefits all of humanity - a framing that acknowledges the risk while betting on the upside.

Where should business leaders focus? The existential risk debate matters, but it is, at worst, a medium-to-long-term concern. More importantly, pausing AI development - as some have advocated - is practically unenforceable and risks ceding ground to competitors and adversarial actors who won't pause. For business and government leaders today, the more pressing risks are concrete, current, and actionable. Those are the ones we turn to now.

The Risks That Deserve Your Attention Today

a. Errors and Biases

Large language models are powerful, but they are not infallible. Research consistently shows meaningful differences between the opinions and outputs of LLMs and those of the broader population, raising concerns about embedded biases in everything from hiring tools to medical triage systems.

There's also the growing problem of model degradation - sometimes called the "Habsburg AI" phenomenon - where models trained on synthetic, AI-generated data circulating on the internet compound errors over time, progressively narrowing and distorting their outputs.

The real-world consequences are already here:

- In the Schwartz-Avianca case, a lawyer submitted a court brief containing case citations fabricated by ChatGPT - none of which existed.

- New York City's Microsoft-powered MyCity chatbot encouraged business owners to break the law, telling entrepreneurs that they could take a cut of their workers’ tips, fire workers who complain of sexual harassment, and serve food that had been nibbled by rodents.

- Grok AI posted on X falsely accusing NBA star Klay Thompson of a vandalism spree, claiming he had thrown bricks through the windows of multiple houses in Sacramento, California.

- The AI-powered recruiting software of remote tutoring provider iTutor Group automatically rejected applicants because of their age, screening out female applicants ages 55 and older and male applicants ages 60 and older.

These aren't edge cases. They're symptoms of a systemic reliability problem that leaders must account for.

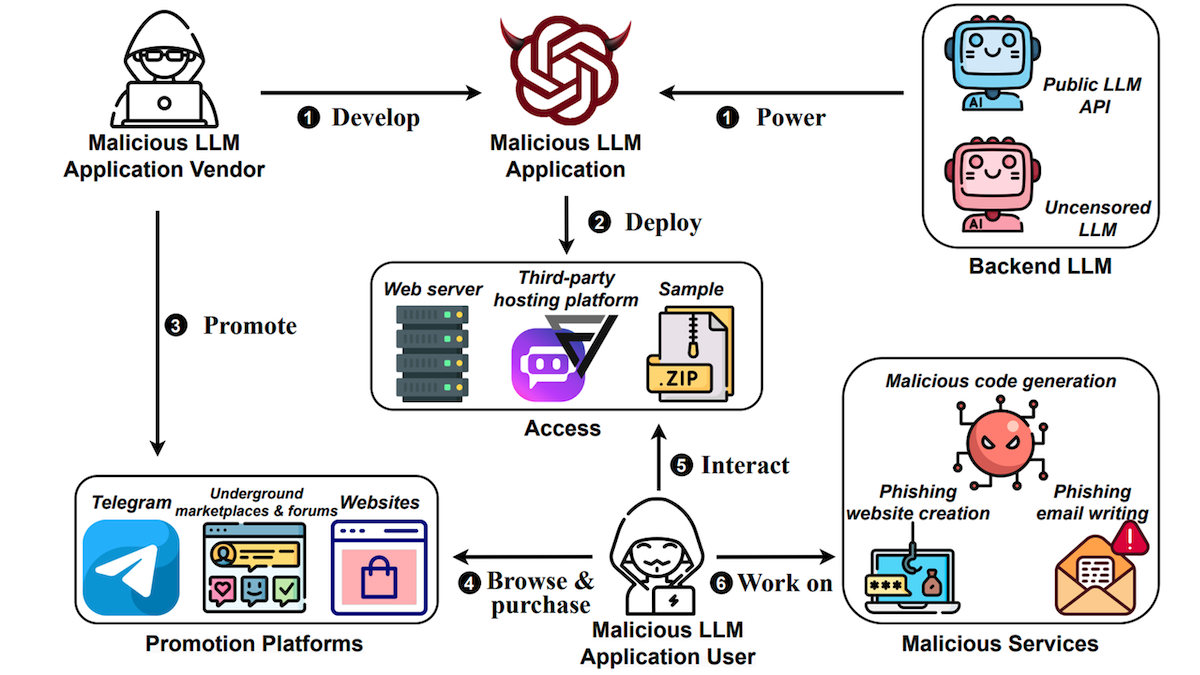

b. Malicious Use

AI doesn't just make mistakes. It gets weaponized.

- Expense fraud is surging. Expense management platforms are reporting a significant increase in AI-generated fake receipts, according to the Financial Times. SAP's research found that more than 70% of CFOs now believe their employees are using AI to falsify expenses, with 10% certain it has already happened within their companies.

- Cyberattacks are being automated. Reports have emerged of hackers using Anthropic's AI to automate and scale cyberattack operations, lowering the barrier to sophisticated threats.

- Even fine-tuning creates risk. Research has shown that fine-tuning foundation models can inadvertently jailbreak built-in safety guardrails, causing models to respond to harmful instructions they were originally designed to refuse.

The attack surface is expanding faster than most organizations' defenses.

How LLMs may be used to provide harmful services

c. Ethical Risks

Beyond errors and abuse lies a broader landscape of ethical concerns:

- Misinformation at scale, where AI-generated content floods information ecosystems with convincing but false narratives.

- Copyright violations, such as the case in which France's competition regulator fined Google $271 million, in part for training its Bard AI on news publishers' articles without notifying them.

- Privacy erosion, as models trained on vast datasets inevitably ingest personal information without meaningful consent.

- Concentration of corporate power, as a small number of companies control the foundational models, compute infrastructure, and data pipelines that the rest of the economy increasingly depends on.

d. The AI Bubble Question

Behind the optimism, there are structural concerns about the AI economy itself. Critics point to circular dealmaking - where major AI companies invest in each other's products and services, inflating demand signals - and heavy governmental subsidization driven more by geopolitical competition than market fundamentals. Whether this constitutes a bubble or a justified infrastructure investment remains an open question, but leaders should be clear-eyed about the dynamics at play.

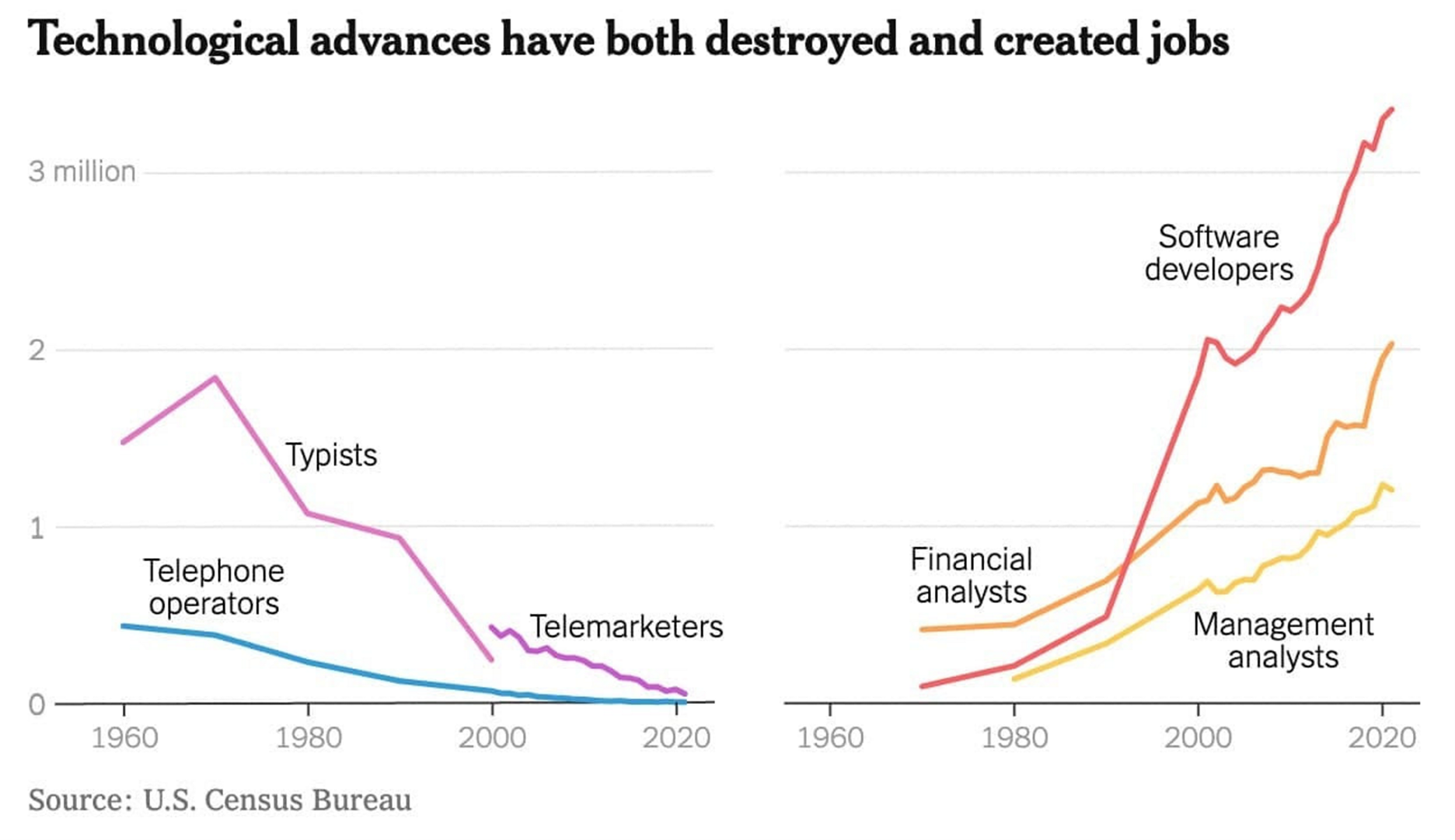

e. Labor Market Disruption

Headlines warning that AI could displace 300 million jobs have understandably fueled anxiety. The disruption is real and will be unevenly distributed across industries and geographies, but fears of net job loss on that scale are vastly overstated. Previous technological revolutions prompted similar concerns, which proved largely unfounded: as some job categories vanish, new ones spring up to fill the void. A 2022 study by MIT economist David Autor illustrates how dynamic the job market is: a striking 60% of the jobs that existed in 2018 did not exist in 1940. There is also a countervailing trend that receives less attention: declining birth rates across developed economies are already creating labor shortages that AI may help address rather than exacerbate. The net effect will depend heavily on how proactively organizations and governments manage the transition.

f. Financial Stability

Regulators are increasingly flagging AI-related risks to financial markets, including:

- One-way market dynamics, where AI-driven trading strategies converge on similar signals and amplify directional moves.

- Market liquidity and volatility, as algorithmic decision-making accelerates market movements beyond human reaction times.

- Interconnectedness and concentration, where dependence on a small number of AI providers creates systemic single points of failure.

g. The Broader Risk Taxonomy

For leaders conducting risk assessments, the full landscape includes: discrimination and fairness risk, legal and regulatory risk, security and privacy risk, data quality and provenance risk, transparency and accountability risk, behavioral manipulation risk, environmental impact risk, surveillance risk, labor exploitation, and pollution of the information ecosystem through synthetic content. No single framework captures all of these, which is precisely why governance matters - a point we'll return to shortly.

h. AI-Washing

Not all AI risks come from AI itself. Some come from companies claiming to use AI when they don't - or overstating its role. The SEC took action, charging two investment advisers - Delphia Inc. and Global Predictions Inc. - with making "false and misleading statements" about their use of artificial intelligence. As AI becomes a marketing advantage, expect regulatory scrutiny of AI claims to intensify.

i. AI Work Slop

Finally, there's the emerging problem of low-quality AI-generated work product - sometimes called "AI slop" or "workslop" - circulating within organizations. According to a report from the Stanford Social Media Lab and BetterUp Labs published in Harvard Business Review, 40% of 1,150 US-based employees surveyed said they had received AI-generated work slop from a coworker within the past month. This erodes internal trust in AI outputs and creates a quality control challenge that many organizations haven't yet acknowledged, let alone addressed.

How AI Trust Can Be Built

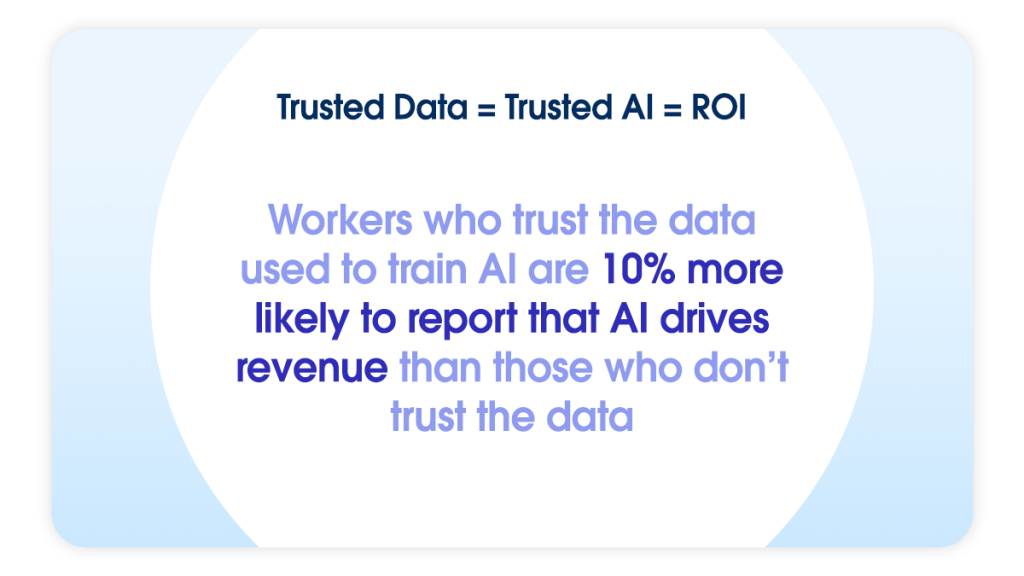

The risks are real. But so are the solutions. The challenge is that most organizations are currently oriented toward proving trust rather than building it. Vanta's newest State of Trust survey of 3,500 leaders quantifies this misallocation:

📊 61% of leaders spend more time proving security than improving it.

📊 59% note that AI risks outpace their expertise.

📊 But 95% say AI is making their security teams more effective.

That last data point is critical. AI is simultaneously the challenge and part of the solution. The question is whether organizations can build the structures to harness it responsibly. Here's how.

1. Push for Tighter, More Cohesive AI Regulation - And Navigate What Exists

The current regulatory landscape is fragmented, and that fragmentation is itself a trust problem.

- The EU leads on stricter regulation with the AI Act and Digital Services Act, but is now reconsidering the competitive cost. Its proposed "digital omnibus package" aims to loosen privacy rules within GDPR, including creating new exceptions for AI companies to legally process special categories of data - religious beliefs, political affiliations, ethnicity, health data - for training and operations.

- The US has relied primarily on voluntary guidelines, with regulation varying significantly at the state level (California, Massachusetts, Texas, and Colorado are each taking different approaches). The Biden administration produced a Blueprint for an AI Bill of Rights followed by an Executive Order on Safe, Secure, and Trustworthy AI, but the Trump administration shifted course with its "Removing Barriers to American Leadership in AI" executive order, prioritizing competitiveness over precaution.

- The UK has taken a lighter, pro-innovation approach, set out in its "A Pro-Innovation Approach to AI Regulation" white paper, which establishes high-level principles rather than statutory law. India and much of APAC remain in early-stage regulatory development, while a few countries, including South Korea, Japan, and China, already operate under binding AI frameworks.

- International efforts remain largely voluntary: the G7 International Guiding Principles on AI and voluntary Code of Conduct for AI developers, as well as the Framework Convention on AI and Human Rights, Democracy, and the Rule of Law - signed by the EU, UK, US, and others - set aspirational standards but lack enforcement mechanisms.

What leaders should do:

- Get expert guidance on global regulatory trends affecting your specific markets.

- Engage proactively with local public officials on evolving regulations - don't wait to be regulated.

- Establish internal governance structures and risk management protocols that meet the highest applicable standard, not just the local minimum.

2. Embrace Transparency and Open Source

Trust grows where opacity shrinks. The trend toward greater model transparency is encouraging:

- More models are being released as open source, enabling independent scrutiny.

- Companies like Anthropic are publishing the system prompts of their Claude models, letting users see the instructions that shape AI behavior.

Organizations should favor solutions that offer explainability and actively demand transparency from their AI vendors.

3. Implement Responsible AI Practices

Responsible AI isn't a philosophy - it's a set of engineering and operational practices:

- Model debugging and red-teaming: Stress-test AI systems before deployment. Tools like Microsoft's Python Risk Identification Tool (PyRIT) enable systematic adversarial testing.

- Explainability by design: Build traceability into models through techniques like perturbation analysis, so outputs can be understood and interrogated - not just accepted.

- Human in the loop: Maintain human oversight at critical decision points. For AI agents, implement asynchronous authorization so that consequential actions require human approval.

- Right-sizing the model: Default to white-box models where possible. When using black-box models, audit them with simpler, interpretable surrogate models to validate behavior.

- Grounding techniques: Use retrieval-augmented generation (RAG), prompt engineering, and targeted fine-tuning to reduce hallucinations and keep outputs anchored in verified data.

- AI labels, watermarks, and metadata: Adopt content provenance standards. Google and other major platforms are already providing AI content labeling - organizations should follow suit internally.

- Narrow, limited-scope agents: Resist the temptation to deploy broadly capable AI agents before trust infrastructure is in place. Start narrow. Expand as confidence is earned.
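To make the perturbation analysis mentioned in the list above more concrete, here is a minimal Python sketch. It nudges one input at a time and records how far the model's output moves, which gives a rough sense of which inputs drive a decision. The `toy_credit_model` function, the feature names, and the 10% nudge are illustrative assumptions, not a real scoring model or a recommended setting. Production explainability tooling generally uses more robust methods.

```python
from typing import Callable, Dict


def perturbation_importance(
    model: Callable[[Dict[str, float]], float],
    example: Dict[str, float],
    delta: float = 0.10,
) -> Dict[str, float]:
    """Score each feature by how much the output moves when only that
    feature is increased by a relative `delta` (10% by default)."""
    baseline = model(example)
    scores = {}
    for name, value in example.items():
        perturbed = dict(example)            # copy so other features stay fixed
        perturbed[name] = value * (1 + delta)
        scores[name] = abs(model(perturbed) - baseline)
    return scores


# Hypothetical stand-in for a black-box model under audit.
def toy_credit_model(features: Dict[str, float]) -> float:
    return (
        0.5 * features["income"] / 1000
        - 2.0 * features["missed_payments"]
        + 0.01 * features["age"]
    )


applicant = {"income": 60000.0, "missed_payments": 2.0, "age": 40.0}
scores = perturbation_importance(toy_credit_model, applicant)
for name, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: {score:.3f}")
```

One limitation of this simple relative nudge: a feature whose value is zero is never perturbed, so a real audit would also need absolute or categorical perturbations.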

Practical tools already exist to support these practices, including Microsoft's Azure ML Responsible AI Dashboard and Singapore's AI Verify - an open-source software toolkit hosted on GitHub designed to help organizations validate their AI systems against governance principles.

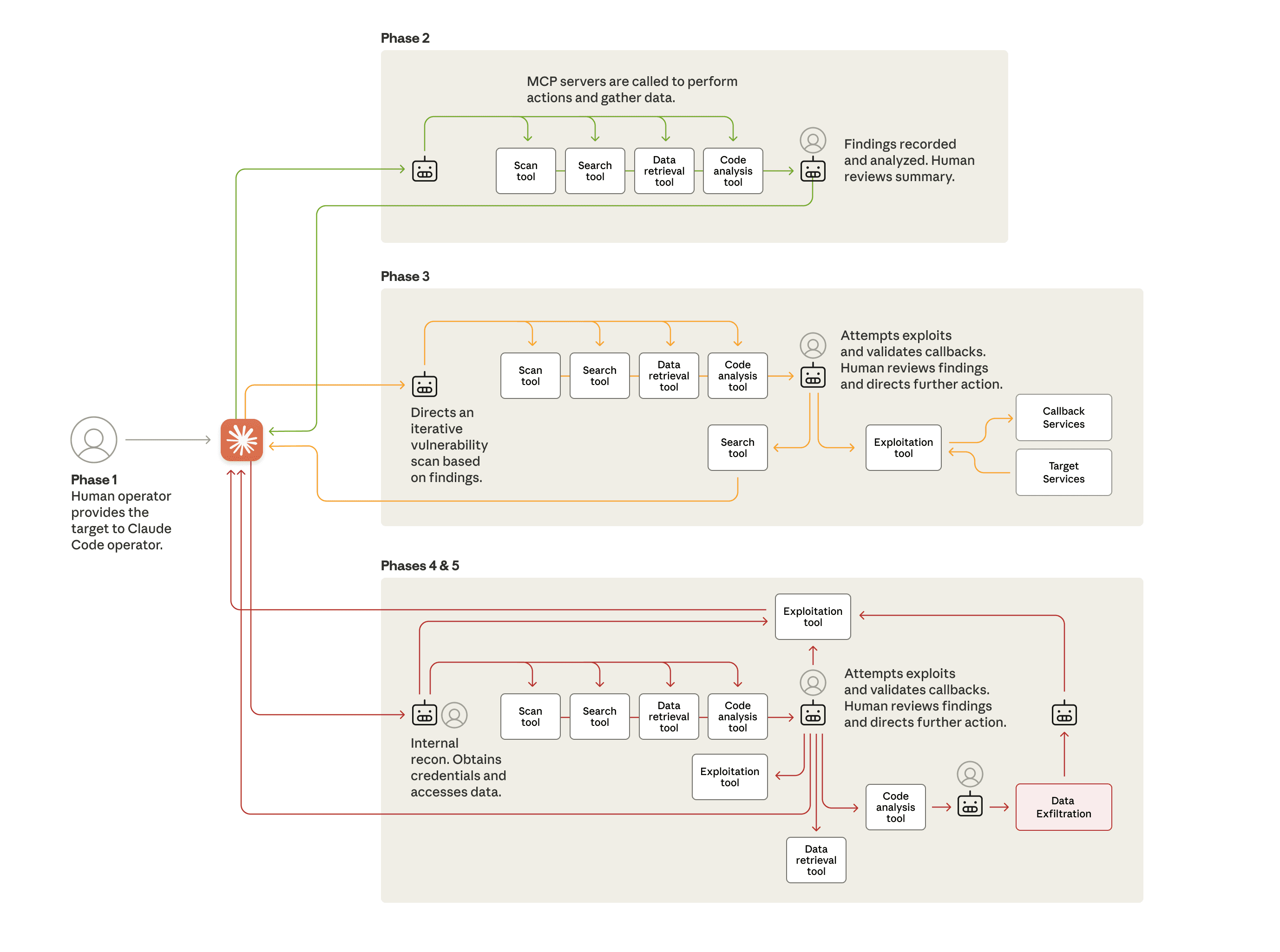

Phases of cyberattack on Claude AI models

4. Invest in Human-AI Alignment

Alignment isn't just a research problem - it's an organizational one. Beyond red-teaming and human-in-the-loop protocols, organizations should invest in structured processes for ensuring AI systems behave consistently with organizational values, user expectations, and regulatory requirements. This includes regular audits, user feedback loops, and clear escalation paths when AI behavior deviates from expectations.

5. Build Governance Infrastructure with Real Expertise

Effective AI governance requires more than policy documents. It requires specialized teams with genuine data science expertise embedded in regulatory and oversight functions. Models to follow include:

- Stanford's Center for Research on Foundation Models, which produces the Foundation Model Transparency Index - a systematic assessment of how transparent leading AI companies are about their models' data, capabilities, and limitations.

- The Holistic Evaluation of Language Models (HELM) benchmark, which evaluates models across a wide range of scenarios to surface blind spots and failure modes.

Organizations should build or hire similar capabilities internally, not outsource governance to teams that lack technical depth.

6. Use AI as a Regulatory and Oversight Tool

AI can - and should - be used to improve and monitor other AI systems. This includes automated detection of bias, drift, policy violations, and security vulnerabilities. Visa, for example, used AI to flag nearly $1B in fraud attempts, dismantling 25,000 scam merchant operations worldwide. AI-powered compliance monitoring can scale oversight in ways that purely human teams cannot.
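As one illustration of automated drift detection, the sketch below computes a Population Stability Index (PSI), a widely used measure of how far a model's current score distribution has moved from a baseline. The bin edges, the sample scores, and the 0.25 alert threshold are assumptions for illustration; the threshold is a commonly cited rule of thumb rather than a standard, and teams should calibrate it to their own data.

```python
import math
from typing import List, Sequence


def psi(expected: Sequence[float], actual: Sequence[float], edges: Sequence[float]) -> float:
    """Population Stability Index between two samples over shared bin edges."""

    def bin_shares(values: Sequence[float]) -> List[float]:
        counts = [0] * (len(edges) + 1)
        for v in values:
            # The bin index is the number of edges the value has reached.
            counts[sum(1 for e in edges if v >= e)] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e_shares, a_shares = bin_shares(expected), bin_shares(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(e_shares, a_shares))


edges = [0.25, 0.5, 0.75]                                  # illustrative score buckets
baseline = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]        # scores at deployment
current = [0.6, 0.7, 0.8, 0.9, 0.8, 0.9, 0.85, 0.95]       # scores this week

drift = psi(baseline, current, edges)
print(f"PSI = {drift:.2f}", "-> investigate" if drift > 0.25 else "-> stable")
```

A check like this can run on a schedule and open a ticket for human review when the threshold is crossed, which keeps people in the loop without asking them to watch dashboards.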

7. Prioritize AI Literacy and Education

Trust cannot be built in an organization that doesn't understand what it's trusting. Invest in AI literacy programs that go beyond surface-level awareness - help leaders, managers, and frontline employees develop practical intuitions about what AI can and cannot do, where it's reliable, and where it needs supervision.

8. Stay Current on Emerging Threats and Mitigation Methods

The risk landscape is evolving rapidly. One example: the Model Context Protocol (MCP), an open standard introduced by Anthropic for connecting AI applications to external tools and data sources, is emerging as an important building block for AI agent governance. MCP is more than plumbing. Because it standardizes how tools are exposed to an agent, it gives organizations a natural control point for defining what an AI system can do, under what rules, and how each action is logged and audited. Leaders should ensure their teams are tracking developments like this and integrating new safeguards as they mature.
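The idea of a control point that defines what an AI system can do, under what rules, and with what audit trail can be sketched independently of any particular protocol. The Python below is a minimal illustration only: `ToolPolicy`, `PolicyGate`, the tool names, and the toy approver are all hypothetical and are not part of MCP or any MCP SDK.

```python
import json
import time
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class ToolPolicy:
    """Rule for one tool (hypothetical schema, not part of any MCP SDK)."""
    allowed: bool = True
    needs_human_approval: bool = False


@dataclass
class PolicyGate:
    """Checks every tool call against a policy and records the decision."""
    policies: Dict[str, ToolPolicy]
    approver: Callable[[str, Dict[str, Any]], bool]  # stand-in for a human review step
    audit_log: List[str] = field(default_factory=list)

    def call(self, name: str, tool: Callable[..., Any], args: Dict[str, Any]) -> Any:
        # Tools without an explicit policy are denied by default.
        policy = self.policies.get(name, ToolPolicy(allowed=False))
        if not policy.allowed:
            decision = "denied"
        elif policy.needs_human_approval and not self.approver(name, args):
            decision = "rejected_by_human"
        else:
            decision = "allowed"
        # Log the decision before acting so blocked attempts are auditable too.
        self.audit_log.append(json.dumps(
            {"ts": time.time(), "tool": name, "args": args, "decision": decision}
        ))
        if decision != "allowed":
            raise PermissionError(f"{name}: {decision}")
        return tool(**args)


gate = PolicyGate(
    policies={
        "lookup_invoice": ToolPolicy(),
        "issue_refund": ToolPolicy(needs_human_approval=True),
    },
    # Toy approver: a real system would route this request to a person.
    approver=lambda name, args: args.get("amount", 0) <= 100,
)

requests = [
    ("lookup_invoice", lambda invoice_id: {"id": invoice_id, "status": "paid"}, {"invoice_id": "INV-1"}),
    ("issue_refund", lambda amount: f"refunded {amount}", {"amount": 500}),
    ("delete_records", lambda: "deleted", {}),
]
for name, tool, args in requests:
    try:
        print(name, "->", gate.call(name, tool, args))
    except PermissionError as err:
        print("blocked:", err)
```

The design choice worth noting is deny-by-default: a tool that nobody has written a policy for cannot run, and every attempt, allowed or not, leaves a log entry.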

9. Deploy AI-Powered Detection and Observability Tools

Organizations deploying AI - especially agentic AI - should invest in dedicated detection and observability infrastructure:

- Tools like NeuralTrust provide AI security and governance monitoring.

- Techniques like ChainPoll enable systematic LLM hallucination detection.

- For organizations running AI agents, an orchestration layer that manages agent lifecycle, context sharing, authentication, and observability is no longer optional - it's foundational.
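For readers who want a feel for how polling-based hallucination detection works, here is a rough Python sketch in the spirit of ChainPoll. As I understand the published description, the method asks a judge LLM, with step-by-step reasoning, whether an answer contains hallucinations, repeats that question several times, and uses the share of "yes" verdicts as the score. The prompt wording, the `VERDICT:` convention, and the `stub_judge` function are my own assumptions for illustration; a real deployment would replace the stub with calls to an actual model.

```python
import itertools
from typing import Callable


def chainpoll_score(
    judge: Callable[[str], str],
    question: str,
    answer: str,
    n_polls: int = 5,
) -> float:
    """Fraction of judge replies that flag the answer as hallucinated."""
    prompt = (
        "Does the following answer contain hallucinations? Think step by step, "
        "then finish with a final line of 'VERDICT: yes' or 'VERDICT: no'.\n"
        f"Question: {question}\nAnswer: {answer}"
    )
    yes_votes = 0
    for _ in range(n_polls):
        lines = judge(prompt).strip().splitlines()
        final_line = lines[-1].lower() if lines else ""
        if "yes" in final_line:   # only the verdict line is counted
            yes_votes += 1
    return yes_votes / n_polls


# Stub judge that cycles through canned replies instead of calling an LLM.
_canned = itertools.cycle([
    "The policy document says 30 days, not 90.\nVERDICT: yes",
    "The answer is phrased plausibly.\nVERDICT: no",
    "No source supports a 90-day window.\nVERDICT: yes",
])


def stub_judge(prompt: str) -> str:
    return next(_canned)


score = chainpoll_score(
    stub_judge,
    question="What is our refund window?",
    answer="Refunds are accepted for 90 days.",
)
print(f"hallucination score: {score:.1f}")
```

Polling several times matters because a single judge reply can be wrong. The aggregate score gives a graded signal that can be thresholded, for example by routing high-scoring answers to human review.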

Pair these technical tools with procedural safeguards: clear policies, regular audits, incident response plans, and defined accountability chains.

What This All Means

For leaders navigating the trust challenge:

Manage perceptions - not just reality. Many of the barriers to AI adoption are rooted in fears that are outdated, exaggerated, or addressable with existing tools. But perception is reality when it comes to organizational buy-in and customer confidence. Proactive communication and education are as important as technical safeguards.

The tension between regulation and innovation is real - and it's producing a fragmented landscape that every organization must navigate. There is no single global standard. Leaders need to build governance frameworks that are robust enough to meet the strictest applicable requirements while flexible enough to adapt as regulations evolve - sometimes in contradictory directions.

Trust is buildable. It requires investment, expertise, and intentionality - but the tools, frameworks, and practices exist today. The organizations that treat trust as a strategic priority, not a compliance checkbox, will be the ones that unlock the full value of AI while their competitors are still stuck debating whether to start.

What's Coming Next

We're developing a Trust Playbook for Business Leaders - a practical, actionable guide that consolidates the frameworks, tools, and governance templates discussed in this edition into a resource you can put to work immediately.

📩 Subscribe to the mailing list to get a copy delivered directly to your inbox when it drops.

------------------------------------------------------------------------

Subscribe to the AI newsletter and join the conversation on LinkedIn.

Have you begun to use AI yet? Or are you struggling to achieve tangible value from your deployments of AI to date?

Explore our offerings and acquire an expert partner today.

About The dAIta Solution

The dAIta Solution provides AI-powered strategic consultancy, process and data mining, analytics, reporting, and automation implementation solutions that enable organizations to unlock the full potential hidden within the information they possess. Our proprietary mining and analytics techniques and vendor-agnostic AI and data software streamline the path to results and facilitate automation of both the analysis of your organization and the implementation of solutions to the weaknesses or growth opportunities identified. Founded by senior consultancy services executives, data scientists, and former EY leaders, The dAIta Solution is headquartered in Los Angeles with operations in London, Lagos, and Singapore. For more information, please visit thedaitasolution.com.
